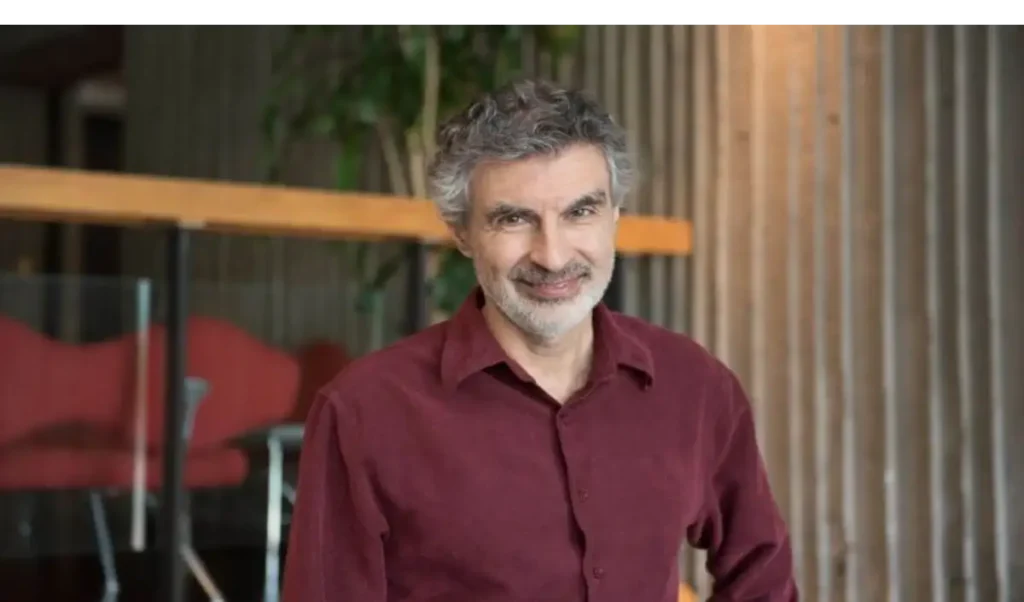

Yoshua Bengio, a prominent figure in artificial intelligence research, has recently expressed concern about the reliability and truthfulness of AI chatbots. He notes that although these systems have advanced significantly in natural language processing, they still struggle to represent factual information accurately. Because chatbots generate responses from patterns in their training data rather than by checking claims against a reliable source, they can spread misinformation or inaccuracies. This lack of truthfulness raises important ethical questions about using AI to communicate and share information.

Bengio emphasizes that the challenge lies not only in the technology itself but also in the datasets on which AI models are trained. If those datasets contain biases or misinformation, the chatbot's responses can reproduce those flaws. Users may then unknowingly accept false information as true, with serious consequences in sectors such as education, healthcare, and politics. The responsibility falls on developers and researchers to build more robust frameworks that safeguard the integrity of the information AI systems provide.

Moreover, truthfulness in AI chatbots is not only a question of accuracy; it also involves the ethics of how these systems are used. As the technology becomes more integrated into daily life, the potential for misuse grows: chatbots could, for instance, be exploited to spread propaganda or manipulate public opinion. Bengio advocates a more conscientious approach to AI development that prioritizes transparency, accountability, and truthfulness. This means not only improving the algorithms but also helping users better understand the limitations of AI systems.

In summary, Yoshua Bengio’s insights highlight a critical aspect of AI development: the need for truthful chatbots. As the field advances, it is essential to address misinformation and bias in AI-generated content. By prioritizing ethical considerations and improving the accuracy of AI systems, we can work toward a future in which technology is a reliable source of information that fosters trust and understanding in an increasingly digital world.